The recommended PVS boot method has shifted over the years, all to solve one problem: how best to get a bootstrap file to a bunch of VMs. For the most part it breaks down into three options: TFTP with DHCP options, PXE with TFTP, and Boot Device Manager (BDM). There are a lot of blogs and articles on this topic, so I won’t repeat the background. In particular, my colleague Nick has two posts (Load Balancing TFTP – Anything But Trivial and Load Balancing TFTP with NetScaler) which talk about the various options. You might want to read those articles first if this is a new topic or you need a quick refresher.

One configuration that doesn’t get enough appreciation or usage is TFTP Load Balancing using NetScaler 10.1. In the past, NetScaler wasn’t generally recommended since too much complexity was required to get a simple service to work. However, NetScaler 10.1, which was released in the middle of last year, now includes TFTP support (scroll two-thirds down for “The NetScaler appliance can now load balance TFTP servers”).

Doing a Google search for “PVS TFTP load balancing NetScaler 10.1” does not yield many Citrix results (although this blog covers a lot of this info), so the purpose of this article is to:

1) show how easy it is to set up TFTP load balancing through NS

2) talk about PVS boot process design options in today’s world

TFTP Load Balancing Configuration Steps

Load balancing is quite easy to configure on the NetScaler and involves the following steps:

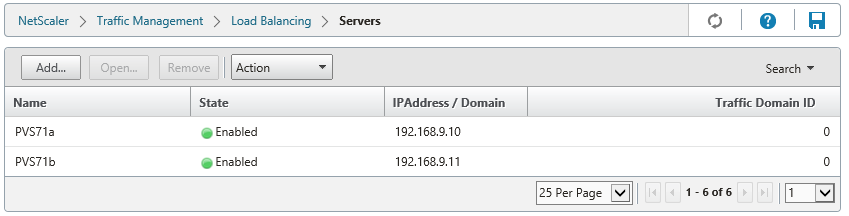

1 – Define PVS servers running the TFTP service

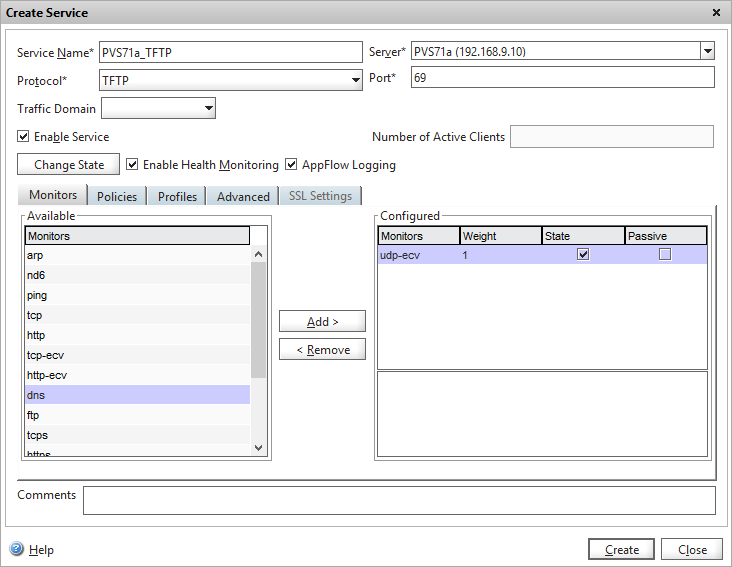

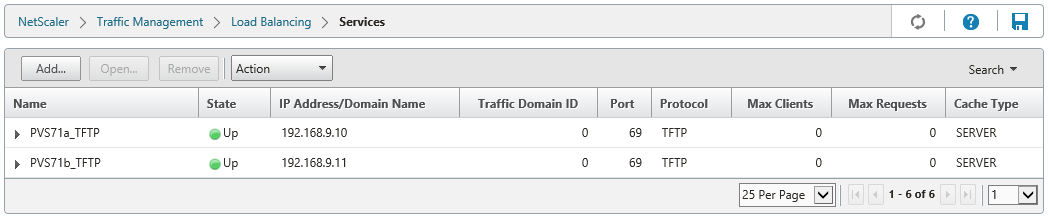

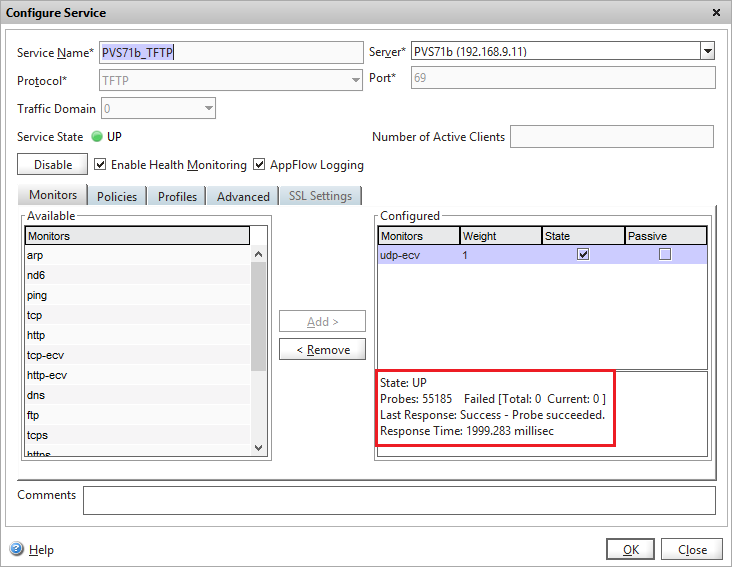

2 – Create a TFTP service for each server (note the “TFTP” protocol and port “69”)

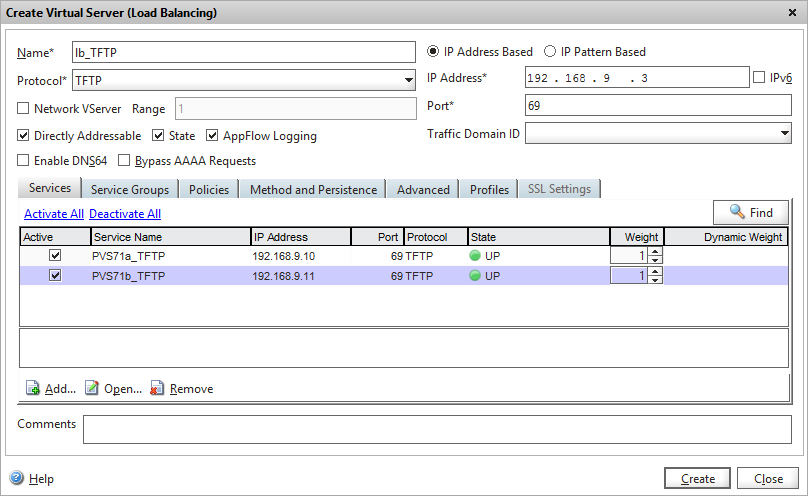

3 – Create a load balancing vServer for TFTP

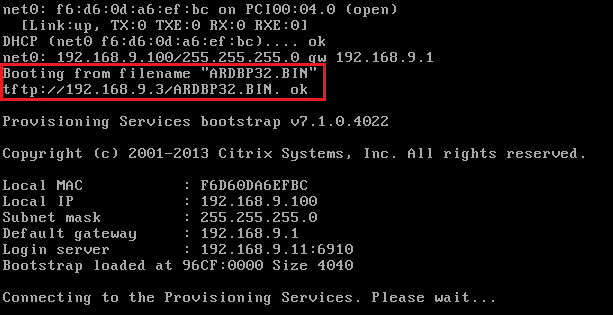

4 – Configure DHCP Options 66/67 for the appropriate scope containing PVS target devices

- Option 66: TFTP LB VIP (in this case 192.168.9.3)

- Option 67: ARDBP32.BIN

5 – Boot the PVS target device!
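Steps 1 through 3 above can also be expressed as NetScaler CLI commands. Here is a minimal Python sketch that prints them. The server names and server IPs are made up for illustration, and only the VIP (192.168.9.3) comes from this article. The command syntax reflects my understanding of the NetScaler CLI, so check it against the command reference for your build before pasting anything into an appliance.

```python
def netscaler_tftp_commands(vip, servers, vserver="vs_pvs_tftp", port=69):
    """Return NetScaler CLI lines for TFTP load balancing.

    `servers` maps a (hypothetical) server name to its IP address.
    """
    lines = []
    for name, ip in servers.items():
        # Step 1: define each PVS server running the TFTP service.
        lines.append(f"add server {name} {ip}")
        # Step 2: one service per server, protocol TFTP on port 69.
        lines.append(f"add service svc_tftp_{name} {name} TFTP {port}")
    # Step 3: the load balancing vServer that owns the VIP.
    lines.append(f"add lb vserver {vserver} TFTP {vip} {port}")
    for name in servers:
        # Attach each TFTP service to the vServer.
        lines.append(f"bind lb vserver {vserver} svc_tftp_{name}")
    return lines


if __name__ == "__main__":
    # Assumed example servers; replace with your own PVS servers.
    pvs_servers = {"pvs01": "192.168.9.11", "pvs02": "192.168.9.12"}
    for line in netscaler_tftp_commands("192.168.9.3", pvs_servers):
        print(line)
```

Generating the commands from one dictionary keeps the service names consistent, which helps when a PVS server is added or removed later.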

Monitoring TFTP

One key element of load balancing is the ability to intelligently monitor services to determine if they are in fact “UP”. NetScaler has functionality built in for key infrastructure components such as the XML service, XenDesktop Controllers and StoreFront. Unfortunately, NetScaler has no comparable purpose-built monitor for TFTP, for example one that would download and verify the bootstrap file.

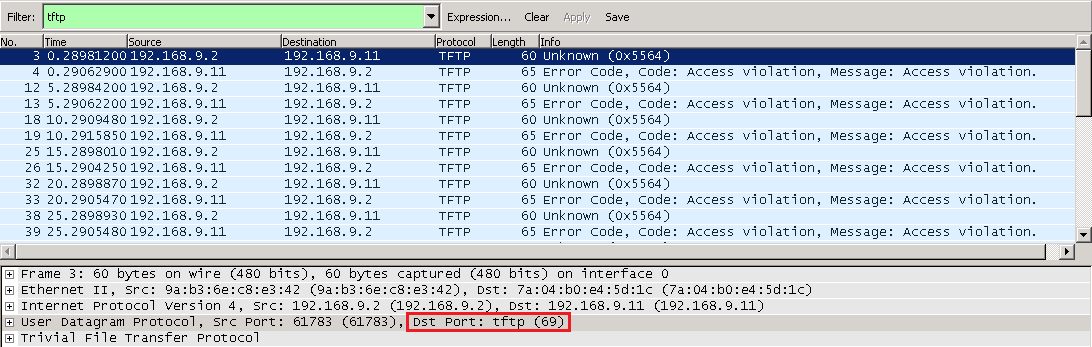

Since TFTP uses UDP, one applicable monitor here is udp-ecv, which sends a UDP request to the service’s configured port and looks for a response. Here is a Wireshark output from the TFTP perspective: by default, NetScaler sends a request over UDP 69 to the service every 5 seconds and gets a response. Yes, it receives an error from the TFTP service because the probe wasn’t a valid request, so this monitor isn’t 100% clean, but it does verify that the TFTP service is responding. Because TFTP is a fairly simple service (Wikipedia starts off by saying “TFTP is a file transfer protocol notable for its simplicity”), I would argue a monitor which receives a response from the TFTP service is sufficient.

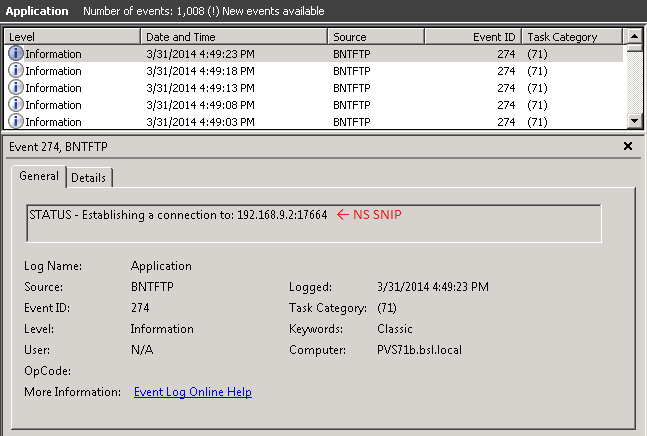

Wireshark probably makes this error look worse than it is; if we enable logging on the TFTP service (via C:\Program Files\Citrix\Provisioning Services\tftpcpl.cpl), then we can see each of these TFTP requests in the Windows Application Log. Here, the TFTP logging level was set to “Log All Events”, resulting in an Informational message appearing every 5 seconds showing communication to the NetScaler.

From the service properties, we can see that the udp-ecv monitor has probed this particular service 55,185 times and there are 0 failures, since the service is responding. Stopping the service or blocking the port would cause a failure and the service would report as down.

For those who want a “cleaner” or more exact TFTP monitor, it is possible to load a custom script to probe the TFTP service for the .bin file (/blogs/2011/01/06/monitoring-tftp-with-citrix-netscaler-revisited-pvs-edition/).
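To make the difference between the two kinds of probe concrete, here is a rough Python sketch of a TFTP read request for the bootstrap file. This is not the custom NetScaler script linked above, just an illustration of the protocol exchange. It assumes the server answers a plain read request without option negotiation, and the function names and timeout are my own choices.

```python
import socket
import struct

# TFTP opcodes: read request, data, error.
OP_RRQ, OP_DATA, OP_ERROR = 1, 3, 5


def build_rrq(filename, mode="octet"):
    """Build a read request: opcode 1, filename, NUL, mode, NUL."""
    return (struct.pack("!H", OP_RRQ) + filename.encode("ascii") + b"\x00"
            + mode.encode("ascii") + b"\x00")


def classify_reply(packet):
    """Classify the first packet a TFTP server sends back."""
    if len(packet) < 4:
        return "malformed"
    opcode, value = struct.unpack("!HH", packet[:4])
    if opcode == OP_DATA and value == 1:
        return "file-served"   # first data block: the file exists and is readable
    if opcode == OP_ERROR:
        return "error-reply"   # service is alive but rejected the request
    return "unexpected"


def probe(host, filename="ARDBP32.BIN", port=69, timeout=2.0):
    """Send one read request and report what came back."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_rrq(filename), (host, port))
        try:
            # The reply arrives from a new server-side port, so no connect().
            packet, _addr = sock.recvfrom(1024)
        except OSError:  # includes timeouts and "port unreachable"
            return "no-response"
    # A fuller probe would acknowledge or abort the transfer here.
    return classify_reply(packet)
```

Calling `probe("192.168.9.3")` against the VIP would return one of the strings above. An "error-reply" roughly corresponds to what the udp-ecv monitor sees today, while "file-served" is the stricter check that the .bin file can actually be downloaded.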

Which Direction?

So now that TFTP load balancing is a viable choice, what is the best boot method? Every design decision has a balance between complexity, cost, ongoing manageability, and high availability. Here is my list of pros and cons for the major contenders:

PXE + TFTP

- Pros
  - Requires minimal server components and configurations
  - High availability of PXE can exist as long as multiple PVS servers running the PXE service exist on the appropriate network
- Cons
  - Can conflict with existing PXE services (or network isolation may be required)
  - Some companies do not want a PXE service running on their network
  - PXE only listens on one port per PVS server, which may be a challenge for environments with target devices in multiple subnets
  - Each PXE service points to its own TFTP service; if a TFTP service goes down there will be a boot failure, even though multiple PVS servers can respond to the PXE requests

Boot Device Manager .iso

- Pros
  - Alleviates dependencies on the network, since BDM can point to any specific IP or DNS name; target devices can be part of any VLAN or DHCP scope to point to the desired PVS farm
  - Easy to configure
  - Requires minimal components
    - No PXE or TFTP service
    - Does require the PVS Two-Stage Boot Service which only listens on 1 NIC
  - BDM.iso located centrally (typically on a VMware datastore or CIFS share for XenServer)
- Cons
  - BDM.iso must be mapped to the CD-ROM drive of each VM, which could affect manageability
  - BDM has some scalability implications: ESXi 5.0 U2 and earlier only allows 8 hosts access to the .iso file on the VMFS datastore (ESXi 5.1 allows up to 32 hosts)
  - Adding/removing TFTP services requires a change to the BDM.iso (although a DNS alias can be used to centrally manage IPs and perform round robin)

DHCP + TFTP LB with NS 10.1

- Pros
  - Load balancing is finally a viable option in NS 10.1
  - Since DHCP is already used (99% of the time), configuring DHCP options 66/67 is relatively easy for most environments
  - Adding/removing TFTP services is done centrally from the NetScaler
  - NetScaler can probe the TFTP service for availability
- Cons
  - Requires internal NS running 10.1 to perform load balancing
  - Target devices need to be in the correct VLAN/DHCP scope in order to get the options to hit the correct PVS farm

Conclusion

As with a lot of things in the Citrix world, there is no single right answer, and the best decision will depend on each organization. For me, if NetScaler already exists internally for load balancing, then NS TFTP load balancing is an easy add-on that provides solid high availability for the PVS bootstrap. Otherwise, BDM and PXE+DHCP are fine options, too.

What are your thoughts? Post your comments below!