In this blog series I’m taking a look at scalability considerations for XenApp 6.5, specifically:

- How to estimate XenApp 6.5 Hosted Shared Desktop scalability

- What’s the optimal XenApp 6.5 VM specification?

- XenApp 6.5 Hosted Shared Desktop sizing example

My last post provided guidance on how to estimate XenApp density based on processor specification and user workload. In the second post of the series, I’m taking a look at the optimal specification for the XenApp virtual machines themselves.

In an ideal world, every project would include time for scalability testing so that the right number of optimally specified servers can be ordered. However, this doesn’t always happen, for reasons that include time and budgetary constraints. Architects are all too often asked for their best guess on the resources required. I’ve been in this situation myself and I know just how stressful it can be. If you over-specify, you cost your company money; if you under-specify, you reduce the number of users that can be supported or, even worse, impact performance.

XenApp Server Virtual Machine Processor Specification

In most situations, testing has shown that optimal scalability is obtained when 4 virtual CPUs are assigned to each virtual machine. When hosting extremely resource-intensive applications, such as computer-aided design or software development applications, user density can sometimes be improved by assigning 6 or even 8 virtual CPUs to each virtual machine. However, in these situations consider using XenDesktop rather than XenApp, so that you have more granular control over the resources assigned to each user.

Number of XenApp Servers per Virtualization Host

When determining the optimal number of virtual XenApp servers per virtualization host, divide the total number of virtual cores by the number of virtual processors assigned to each XenApp virtual machine (typically 4). For example, a server with 32 virtual cores should host 8 virtual XenApp servers (32 / 4 = 8). There is no need to reserve cores for the hypervisor, because this overhead is already accounted for in the user density estimates discussed in the first post.
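The rule above is simple division. The sketch below expresses it in Python; the function name `xenapp_vms_per_host` is illustrative rather than part of any Citrix tool, and it assumes that only whole virtual machines count (leftover cores are ignored).

```python
def xenapp_vms_per_host(virtual_cores: int, vcpus_per_vm: int = 4) -> int:
    """Estimate how many XenApp VMs a virtualization host should run.

    Hypothetical helper: divides the host's virtual cores by the vCPUs per VM.
    No cores are reserved for the hypervisor, because the per-VM user density
    estimates already allow for that overhead.
    """
    if virtual_cores <= 0 or vcpus_per_vm <= 0:
        raise ValueError("core and vCPU counts must be positive")
    # Floor division: leftover cores that cannot form a whole VM are ignored.
    return virtual_cores // vcpus_per_vm


print(xenapp_vms_per_host(32))  # 8
```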

One of the questions I get asked most is whether the total number of virtual cores includes hyper-threading or not. First, what is hyper-threading and what does it do?

The Citrix XenDesktop and XenApp Best Practices whitepaper states:

Hyper-threading is a technology developed by Intel that enables a single physical processor to appear as two logical processors. Hyper-threading has the potential to improve the performance of workloads by increasing user density per VM (XenApp only) or VM density per host (XenApp and XenDesktop). For other types of workloads, it is critical to test and compare the performance of workloads with Hyper-threading and without Hyper-threading. In addition, Hyper-threading should be configured in conjunction with the vendor-specific hypervisor tuning recommendations. It is highly recommended to use new generation server hardware and processors (e.g. Nehalem+) and the latest version of the hypervisors to evaluate the benefit of Hyper-threading. The use of hyper-threading will typically provide a performance boost of between 20-30%.

Testing has shown that optimal density is obtained when the total number of virtual cores includes hyper-threading. For example, a server with 16 physical cores presents 32 virtual cores with hyper-threading enabled (16 physical cores x 2), so it should host 8 XenApp virtual machines (32 virtual cores / 4 virtual CPUs per XenApp virtual machine = 8).
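Counting hyper-threaded cores simply doubles the core count before dividing. A minimal sketch, assuming hyper-threading exposes exactly two logical processors per physical core; both function names are hypothetical:

```python
def virtual_cores(physical_cores: int, hyper_threading: bool = True) -> int:
    """Logical cores presented to the hypervisor.

    Assumes two logical processors per physical core when hyper-threading is on.
    """
    return physical_cores * 2 if hyper_threading else physical_cores


def vms_for_physical_cores(physical_cores: int, vcpus_per_vm: int = 4,
                           hyper_threading: bool = True) -> int:
    """Number of XenApp VMs (4 vCPUs each by default) for the given host."""
    return virtual_cores(physical_cores, hyper_threading) // vcpus_per_vm


print(vms_for_physical_cores(16))                         # 8 (32 virtual cores / 4)
print(vms_for_physical_cores(16, hyper_threading=False))  # 4 (16 cores / 4)
```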

A common mistake is to perform scalability testing with one virtual XenApp server and multiply the results by the number of virtual machines that the host should be able to support. For example, scalability testing might show that a single XenApp server virtual machine can support 60 concurrent ‘Normal’ users, suggesting that a server with 16 physical cores and 8 XenApp server virtual machines should be able to support 480 concurrent users. This approach always overestimates user density, because the number of users per virtual machine decreases with each additional XenApp virtual machine hosted on the virtualization server. In practice, the optimal number of concurrent normal users for a server with 16 physical cores will be approximately 192, with around 24 users per virtual machine.
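The gap between the two approaches is easy to quantify. The constants below are the example figures from the paragraph above (60 users from a single-VM test, 24 users per VM when all 8 VMs share the host), not new measurements:

```python
VMS_PER_HOST = 8             # 16 physical cores x 2 (hyper-threading) / 4 vCPUs
SINGLE_VM_TEST_USERS = 60    # 'Normal' users measured with one VM alone on the host
USERS_PER_VM_FULL_HOST = 24  # 'Normal' users per VM once all 8 VMs are running

# Mistaken approach: extrapolate the single-VM result across every VM.
naive_estimate = SINGLE_VM_TEST_USERS * VMS_PER_HOST
# Realistic approach: use the per-VM density observed on a fully loaded host.
realistic_estimate = USERS_PER_VM_FULL_HOST * VMS_PER_HOST

print(f"Naive estimate:     {naive_estimate} users")      # 480
print(f"Realistic estimate: {realistic_estimate} users")  # 192
print(f"Overestimate:       {naive_estimate / realistic_estimate:.1f}x")  # 2.5x
```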

User Density per XenApp Server Virtual Machine

The user density of each 4 vCPU XenApp server virtual machine will vary according to the workloads it supports and the processor architecture of the virtualization host:

- Dual Socket Host: You should expect approximately 36 light users, 24 normal users or 12 heavy users per XenApp virtual machine.

- Quad Socket Host: You should expect approximately 30 light users, 20 normal users or 10 heavy users per XenApp virtual machine.
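These per-VM figures can be kept in a small lookup table and multiplied by the number of VMs per host. A sketch; the `"dual"`/`"quad"` keys and the `users_per_host` helper are hypothetical names:

```python
# Approximate users per 4 vCPU XenApp VM, from the figures above.
DENSITY_PER_VM = {
    "dual": {"light": 36, "normal": 24, "heavy": 12},
    "quad": {"light": 30, "normal": 20, "heavy": 10},
}


def users_per_host(host: str, workload: str, vms_per_host: int) -> int:
    """Estimated concurrent users on one host for a single workload type."""
    return DENSITY_PER_VM[host][workload] * vms_per_host


print(users_per_host("dual", "normal", 8))   # 192
print(users_per_host("quad", "normal", 20))  # 400
```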

Depending on your worker group design, you may have a blend of light, normal and heavy users on each XenApp virtual machine. In these situations, adjust your density estimates based on the relative density of each workload type compared with a normal user (light = 1.5, normal = 1, heavy = 0.5). For example:

Dual Socket Host:

- 30% light: (36 / 100) x 30 = 10.8, rounded to 11 users

- 60% normal: (24 / 100) x 60 = 14.4, rounded to 14 users

- 10% heavy: (12 / 100) x 10 = 1.2, rounded to 1 user

Total number of users per XenApp virtual machine = 26
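The blended calculation follows a fixed pattern: apply each workload's percentage to that workload's per-VM density, round, and sum. A minimal sketch of that method; the helper name is hypothetical, and Python's `round()` rounds exact halves to the nearest even number (a case that does not arise in this example):

```python
DENSITY_PER_VM = {
    "dual": {"light": 36, "normal": 24, "heavy": 12},
    "quad": {"light": 30, "normal": 20, "heavy": 10},
}


def blended_users_per_vm(host: str, mix: dict) -> int:
    """Users per VM for a mix of workloads, following the worked example.

    `mix` maps a workload type to its percentage share; shares must total 100.
    """
    if abs(sum(mix.values()) - 100) > 1e-6:
        raise ValueError("workload percentages must add up to 100")
    density = DENSITY_PER_VM[host]
    # Each share of the VM is filled with that workload's users, then rounded.
    return sum(round(density[workload] / 100 * pct) for workload, pct in mix.items())


print(blended_users_per_vm("dual", {"light": 30, "normal": 60, "heavy": 10}))  # 26
```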

Memory Specification

The amount of memory assigned to each virtual machine varies according to the memory requirements of the workload(s) it supports. As a general rule of thumb, calculate memory requirements by multiplying the number of light users by 341MB, normal users by 512MB and heavy users by 1024MB, then adding the results (these figures include operating system overhead). Therefore, each virtual machine hosted on a dual socket host should typically be assigned 12GB of RAM (for example, 24 normal users x 512MB) and each virtual machine hosted on a quad socket host should be assigned 10GB of RAM (20 normal users x 512MB).
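The memory rule of thumb is a weighted sum. A sketch using the per-user figures above (which already include operating system overhead); the helper name is hypothetical:

```python
MB_PER_USER = {"light": 341, "normal": 512, "heavy": 1024}


def vm_memory_mb(users: dict) -> int:
    """Memory for one XenApp VM, given a count of users per workload type."""
    return sum(MB_PER_USER[workload] * count for workload, count in users.items())


# Dual socket host: 24 normal users per VM -> 12288MB (12GB).
print(vm_memory_mb({"normal": 24}))
# Quad socket host: 20 normal users per VM -> 10240MB (10GB).
print(vm_memory_mb({"normal": 20}))
# Blended dual socket VM (11 light, 14 normal, 1 heavy) -> 11943MB, under 12GB.
print(vm_memory_mb({"light": 11, "normal": 14, "heavy": 1}))
```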

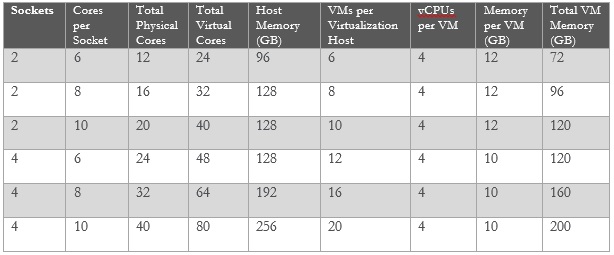

The following table shows typical memory specifications for each processor specification:

Don’t forget to allocate memory for the hypervisor. With XenServer, this is 752MB by default.

Depending on hardware costs, it may make sense to reduce the number of XenApp server virtual machines per virtualization host. For example, instead of purchasing a quad socket virtualization host with 80 virtual cores and 256GB of memory to support 20 XenApp server virtual machines, you could reduce the number of virtual machines per host to 19 so that only 192GB of memory is necessary (19 x 10GB for the virtual machines, plus 2GB for the hypervisor). Although this reduces user density by approximately 30 light, 20 normal or 10 heavy users per host, it also saves 64GB of memory.
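The trade-off can be checked with a few lines of arithmetic. A sketch, assuming 10GB per XenApp VM (quad socket host), the 2GB hypervisor allowance used in the example, and a hypothetical list of orderable memory configurations:

```python
GB_PER_VM = 10                            # quad socket host, from the memory figures above
HYPERVISOR_GB = 2                         # allowance used in the example
MEMORY_OPTIONS_GB = (128, 192, 256, 384)  # hypothetical orderable configurations


def host_memory_gb(vms: int) -> int:
    """Memory needed on the host for the given number of XenApp VMs."""
    return vms * GB_PER_VM + HYPERVISOR_GB


def smallest_config_gb(required_gb: int) -> int:
    """Smallest memory option that satisfies the requirement.

    min() raises ValueError if no option is large enough.
    """
    return min(size for size in MEMORY_OPTIONS_GB if size >= required_gb)


for vms in (20, 19):
    need = host_memory_gb(vms)
    print(f"{vms} VMs need {need}GB -> buy {smallest_config_gb(need)}GB")
# 20 VMs need 202GB -> buy 256GB
# 19 VMs need 192GB -> buy 192GB
```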

Depending on the hardware selected, you may find that you can assign more than 12GB of memory to each XenApp virtual machine. If so, it makes sense to use all of the available memory.

Disk Input Output Operations per Second (IOPS)

Regardless of whether local or shared storage is used, the storage subsystem must be capable of supporting the anticipated number of IOPS. As a general rule of thumb, each light user requires an average of 2 steady state IOPS, each normal user requires an average of 4 steady state IOPS and each heavy user requires an average of 8 steady state IOPS. Therefore:

Dual Socket Host:

- Light users: 36 users x 2 IOPS = 72 steady state IOPS per XenApp virtual machine

- Normal users: 24 users x 4 IOPS = 96 steady state IOPS per XenApp virtual machine

- Heavy users: 12 users x 8 IOPS = 96 steady state IOPS per XenApp virtual machine

Quad Socket Host:

- Light users: 30 users x 2 IOPS = 60 steady state IOPS per XenApp virtual machine

- Normal users: 20 users x 4 IOPS = 80 steady state IOPS per XenApp virtual machine

- Heavy users: 10 users x 8 IOPS = 80 steady state IOPS per XenApp virtual machine
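Each figure above is density multiplied by IOPS per user, and multiplying again by the number of VMs gives a per-host total. A sketch with hypothetical helper names; it covers steady-state load only, not logon peaks:

```python
# Approximate users per 4 vCPU XenApp VM, from the density figures earlier.
DENSITY_PER_VM = {
    "dual": {"light": 36, "normal": 24, "heavy": 12},
    "quad": {"light": 30, "normal": 20, "heavy": 10},
}
IOPS_PER_USER = {"light": 2, "normal": 4, "heavy": 8}  # steady-state averages


def steady_state_iops_per_vm(host: str, workload: str) -> int:
    """Steady-state IOPS for one XenApp VM running a single workload type."""
    return DENSITY_PER_VM[host][workload] * IOPS_PER_USER[workload]


def steady_state_iops_per_host(host: str, workload: str, vms_per_host: int) -> int:
    """Steady-state IOPS the storage must sustain for one host."""
    return steady_state_iops_per_vm(host, workload) * vms_per_host


print(steady_state_iops_per_vm("dual", "normal"))       # 96
print(steady_state_iops_per_host("dual", "normal", 8))  # 768
```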

It is hard to estimate the number of IOPS generated per user during logon, because the logon rate, logon actions and logon time vary significantly between implementations. It is also not possible to provide estimates based on user workload type, because logon activity is unrelated to workload type. Some businesses may experience the majority of their logons between 08:30 and 09:00, while others may have multiple shifts. Regardless, a disk write cache on local storage (at least 1GB) and on shared storage can help soak up short periods of heavy disk utilization.

I realize that some of these recommendations can be hard to follow for first-timers, which is why the last post in this series will walk you through an example XenApp sizing exercise. Stay tuned!

For more information on recommended best practices when virtualizing Citrix XenApp, please refer to CTX129761 – Virtualization Best Practices.

Andy Baker – Architect

Worldwide Consulting

Desktop & Apps Team
