GPT-5 dropped last week, and the internet is having a moment.

“That’s it?”

“Where’s the AGI we were promised?”

“AI bubble confirmed!”

I woke up to a dozen messages asking whether my timelines had changed, whether I’d “over-rotated on AI,” and whether I needed to change my vision for Citrix. Taken together, they suggested I’d been “drinking the AI Kool-Aid” and asked if I was ready to admit it was all hype.

Let me be crystal clear: Even if all AI development stopped today—if every AI lab shut down and not a single new model was ever trained again—we’d still have 5+ years of transformation ahead of us just to integrate what already exists.

Reality check: today’s AI is already transforming knowledge work

I’ll put myself out there: I’m probably in the top 2-3% of knowledge workers when it comes to general AI usage. I leverage it for research, writing, brainstorming, analysis, planning—basically everything I do. And I can say with confidence that if the other 97% of global knowledge workers used AI the way I do today, it would fundamentally transform business. Full stop.

In fact, here’s how I introduced my org chart at Citrix in a recent QBR (TBH = “To Be Hired”):

But most people don’t use AI like I do. Most companies don’t either. Most IT departments are still debating whether or how to allow ChatGPT access while their advanced workers are already six tools deep into their shadow AI stack.

This post isn’t about GPT-5 or any future model. It’s about the massive gap between what’s possible with today’s AI and how knowledge workers are able to use it at work.

Look at what “ChatGPT” actually is today

People talk about ChatGPT like it’s still that simple chat app from 2022. It’s not. Today’s ChatGPT (or Claude, Gemini, etc.) is an entire ecosystem that includes:

- Text, image, audio, and video generation and processing

- Web browsing and deep research capabilities

- Code execution environments: Python, data analysis, complex computations

- Local desktop app integration with command line access, IDE integration, file system access, and automations

- Direct connectivity to modern SaaS apps via APIs and MCP, like Gmail, Calendar, Docs, Slack, HubSpot, Salesforce, etc.

- Computer-using capabilities: launching browsers, interacting with web apps, and navigating desktop apps, files, and general tasks. (BTW the leading CUAs now score 61% on the benchmarks, up from 45% when I wrote that post less than a month ago!)

- Scheduled and repeatable workflows for “set it and forget it” automation. (This is how I track the CUA leaderboard, for example.)

- Meeting recording and advanced voice modes for real-time transcription and interaction

- Project scaffolding: shared workspaces, custom GPTs, and persistent context

- Screen capture and recording: it can see what you see, assist you along the way, and remember and “recall” what you’ve seen

- Canvas for building generated workflows: a place to view & interact with generated code, docs, plans, etc.

- Memory and personalization, remembering you and what you’re working on across sessions

Thinking of these tools as just “chatbots” is comically inaccurate today. These are fully-fledged extensible platforms for knowledge work. (Dare I say “digital coworkers”?)

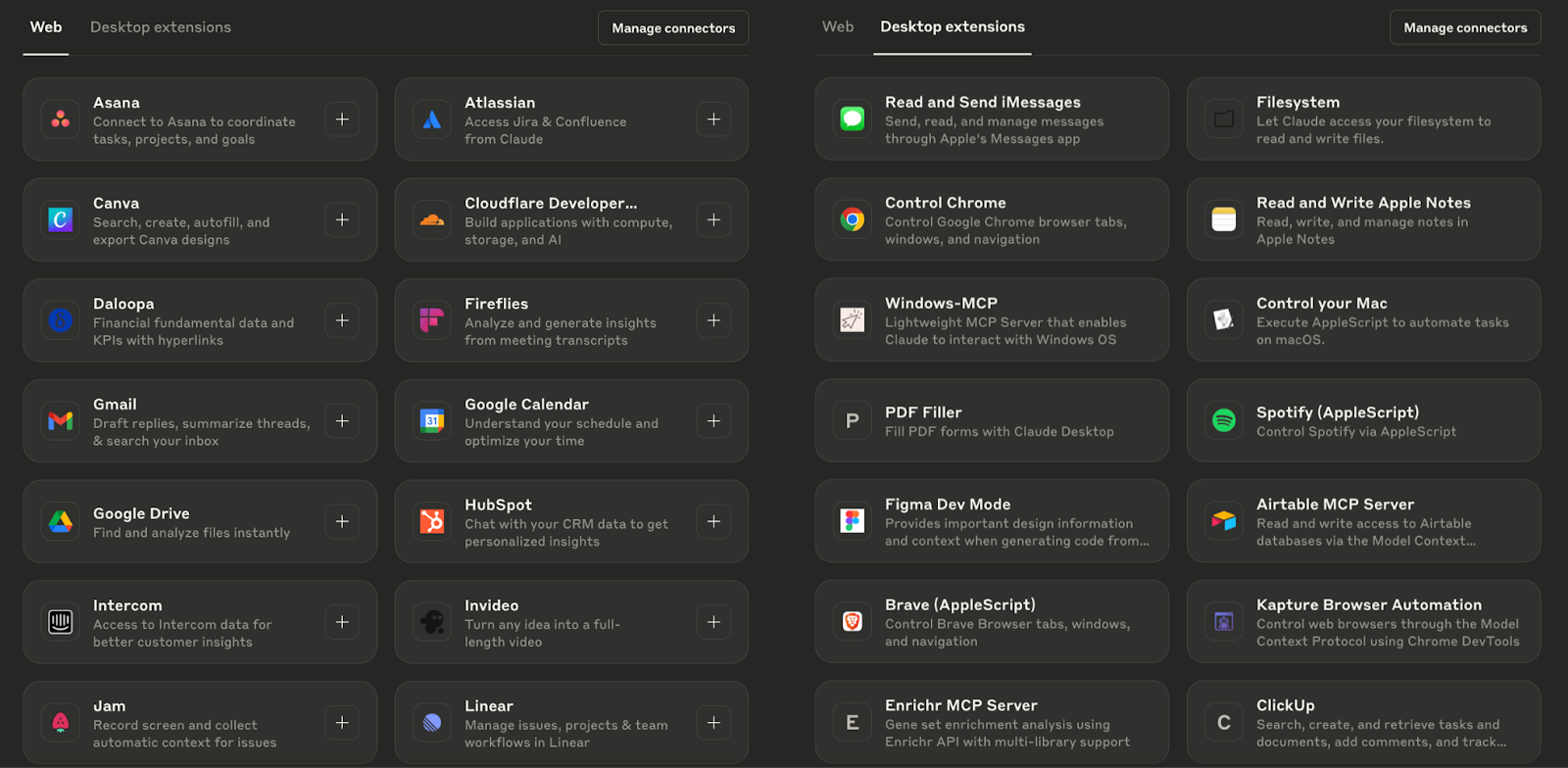

As another example, look at the various connectors the Claude desktop app has built in today:

New tools, connectors, and capabilities like these are being added to these platforms all the time, usually without requiring changes to the underlying models. The capability for knowledge workers to use AI platforms to do real work exists today. This is not sci-fi, and not something we have to wait for.

The enterprise integration gap is massive

A disconnect exists between what’s possible and what companies allow. For example, even though Claude can connect to Gmail, Google Calendar, and Google Drive, most enterprises have security policies that prevent connecting it to their corporate instances of those services. So while workers could benefit from connecting their chosen AI tool to corporate apps, most enterprises first need to sort out the relevant security and data boundaries. But realizing the potential that exists today does not require updates to the core models.

You can go down the list of connectors and imagine the same questions for each. What if every worker’s model of choice could access their proper corporate browser and workspace, not the generic one hosted by the model company? The same goes for the secure developer workspace, and so on.

Of course, enabling any of this would require that the AI platform operate within the standard corporate guardrails (DLP, security controls, audit logs, and the like), just like any human worker.

Each of these integrations represents months of work for enterprises: security reviews, policy development, governance frameworks, training, and change management. But once integrated, any of these could provide real value to the company without any further advances in AI.

Stop waiting for the next model

The constant chase for the next model is a distraction. It’s like holding off on deploying Windows 11 because you heard Windows 12 might be better.

The gap isn’t in AI capabilities. The gap is in enterprise readiness. While everyone’s debating whether AI is “truly intelligent” or waiting for AGI, the real work is figuring out:

- How to give AI secure access to corporate systems

- How to audit and govern AI actions

- How to blend human and AI workflows

- How to measure and optimize AI value

- How to train workers to leverage AI properly

- How to prevent shadow AI from becoming a security nightmare

None of this requires GPT-5, 6, or 10. It requires taking what we have seriously and doing the hard work of integration.

The Citrix view: Secure the work (no matter who’s doing it)

At Citrix, we’ve been thinking about this deeply. Not “what if AI becomes AGI?” but “what happens when thousands of AI agents need access to enterprise desktops?” Not “will AI replace workers?” but “how do we secure AI and human workers operating side-by-side?”

The answer isn’t to wait for AI to “mature” or for the hype to die down. It’s to build the infrastructure now for the AI-augmented workplace that’s already emerging.

That means:

- Workspaces that can host both human and AI workers

- Security that follows the work, not just the worker

- Governance that applies whether it’s a person or an AI clicking buttons

- Infrastructure that scales for 24/7 AI operations, not just 9-5 humans

The bottom line

GPT-5 could cure cancer tomorrow, and it wouldn’t change the fundamental challenge: enterprises have years of work ahead just to properly integrate today’s AI capabilities.

Stop worrying about whether AI is overhyped. Stop waiting for the next model. Stop debating AGI timelines. Start focusing on the very real, very immediate work of enabling, securing, and integrating today’s AI platforms in your workplace.

Because while you’re waiting for the AI revolution to be “confirmed,” your workers are already living it. The only question is whether you’re going to acknowledge and support it or keep your head in the sand.

This next phase is the exciting part. The technology is here, the capabilities exist, and the real workplace AI transformation is about to begin. It’s going to be a wild ride.

Read more & connect

Join the conversation and discuss this post on LinkedIn. You can find all my posts on my author page on the Citrix blog (or via RSS).

Video of my most recent talk

In May I gave the closing keynote at the EUCtech Denmark 2025 conference, called The Future of Work in an AI-Native World. I talked about a lot of what I covered today and walked through how AI will evolve and impact the workplace in the coming years. You can watch it on YouTube.

My upcoming public talks

- AppManagEvent: Closing Keynote: AI & the Future of Enterprise Apps — Utrecht, Netherlands, Oct 10

- MAICON 2025: AI at Work: The Employees’ Revolution! — Cleveland, Ohio, Oct 14-16

AI Computer-Using Agent (CUA) skills benchmark progress

We’ve had another update since my last post, with the leading CUA now scoring 61%. (Humans score 72%.) What is this, and why does it matter?