In our last Citrix on Azure TIPs webinar, Jeff Mitchell, Microsoft Cloud Solution Architect, and I shared lessons learned and the five key principles for Azure design. During the live Q&A, many attendees had questions and comments about the Azure compute, network, and storage needed to turn a design into reality. While we answered some questions live, our team wanted to keep sharing the lessons learned from the last webinar with a deep dive into the building blocks of a leading-practices Citrix deployment on Microsoft Azure.

When preparing for an Azure deployment, you first need to understand the resources available for the foundation of your design. At a high level, this foundation consists of your Azure subscription and the Resource Groups that organize the compute, network, and storage within it.

Let’s explore Resource Groups and Azure Instances in greater detail:

Resource Groups

Resource Groups are containers for Azure IaaS and PaaS components. They group Azure resources and their associated costs, and they provide a mechanism for the granular application of Azure Role-Based Access Control (RBAC). In the webinar, we highlighted the importance of planning a granular Resource Group structure as part of the governance architecture design, but what does this look like from a deployment standpoint?

Below you will see a sample set of Resource Groups for a Citrix on Azure Deployment:

There are a few key takeaways from the above image:

- Naming — Using a comprehensive naming scheme can improve organization and transparency allowing teams to understand who owns the resource, what is contained in the Resource Groups, and where they are located. Planning this upfront is critical as changing the naming after creation is not straight forward. I typically recommend a naming structure that contains at minimum the following details:System – Component – Environment – Locationa. System — What system do the resources belong to or who owns them. (Citrix, a specific application, core infrastructure, etc.)

b. Component — What is the role the resources play for the system?

c. Environment — Production, Staging, Dev with a number to account for multiple pods that may exist in your Azure region.

d. Location — The Azure datacenter. - Make Resource Groups for different Components — Since Resource Groups are leveraged to apply Azure RBAC, a granular set of Resource Groups will facilitate assigning least privilege to admins. For example, a “NetScaler” Resource Group if there is a separate team that owns those components or a “Storage” Resource Group if there is a Desktop team who may create and manage master images that are distributed via Citrix Machine Creation Services.

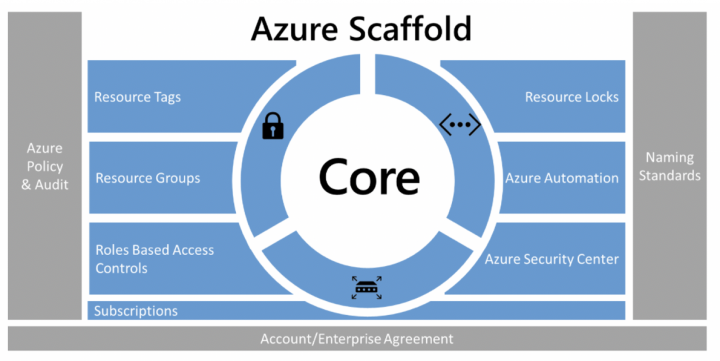

- Tagging can facilitate assigning resources to specific cost centers — Tags can be assigned to a Resource Group. There are a 15 tag name/value pairs per Resource or Resource Group which are commonly used to help track billing. Another common scenario is using tags to facilitate governance with Azure Policy & Audit from Azure enterprise scaffold – prescriptive subscription governance. If you require more than 15 pairs, a tag name can be 512 characters long and the tag value character limit is 256 which can be formatted as a JSON string.
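To make the naming and tagging guidance concrete, here is a minimal Python sketch that builds a Resource Group name from the System-Component-Environment-Location pattern and packs extra name/value pairs into a JSON tag value. The example values (Citrix, VDA, Prod01, EastUS, CC-1234), the helper names, and the lower-casing are hypothetical choices for illustration, and the limits are the ones cited above rather than values read from Azure. The sketch does not check every Azure naming rule (allowed characters, maximum name length).

```python
import json
from typing import Dict, Optional

# Limits as cited in the post at the time of writing; check current Azure limits.
MAX_TAGS = 15
MAX_TAG_NAME_LEN = 512
MAX_TAG_VALUE_LEN = 256


def resource_group_name(system: str, component: str, environment: str, location: str) -> str:
    """Join the four naming elements as System-Component-Environment-Location."""
    parts = [system, component, environment, location]
    if not all(p.strip() for p in parts):
        raise ValueError("all four naming elements are required")
    # Lower-casing is a house-style choice for this sketch, not an Azure requirement.
    return "-".join(p.strip().lower() for p in parts)


def build_tags(tags: Dict[str, str],
               packed: Optional[Dict[str, Dict[str, str]]] = None) -> Dict[str, str]:
    """Merge plain tags with JSON-packed tags and check them against the limits."""
    result = dict(tags)
    for name, values in (packed or {}).items():
        # Several name/value pairs stored as one compact JSON string value.
        result[name] = json.dumps(values, separators=(",", ":"))
    if len(result) > MAX_TAGS:
        raise ValueError(f"{len(result)} tags exceeds the limit of {MAX_TAGS}")
    for name, value in result.items():
        if len(name) > MAX_TAG_NAME_LEN or len(value) > MAX_TAG_VALUE_LEN:
            raise ValueError(f"tag {name!r} exceeds the name/value length limits")
    return result


rg = resource_group_name("Citrix", "VDA", "Prod01", "EastUS")
tags = build_tags({"CostCenter": "CC-1234"},
                  packed={"Billing": {"dept": "finance", "project": "vdi"}})
print(rg)  # citrix-vda-prod01-eastus
print(tags)
```

Generating names from a single function keeps every team on the same convention; the resulting name and tag dictionary can then be handed to whatever deployment tooling you use.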

Azure Instances — Citrix Workloads

The Citrix XenApp or VDI workloads will drive the majority of the Azure IaaS footprint in an Azure deployment. Optimizing the deployment and management of these workloads is critical to controlling the TCO of an Azure Resource Location.

There are two payment models for Azure instances: Pay-as-you-go and Reserved Instances. Pay-as-you-go instances are charged per hour of use, so power management of these workloads based on peak and off-peak times can create cost savings and promote greater efficiency. Azure Reserved VM Instances are VM capacity purchased in advance for a 1-year or 3-year term to reduce the hourly cost of the desired instance type. This model is ideal for Citrix infrastructure and for the minimum always-on capacity required to support the business needs identified during the design phase.
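Whether a reservation pays off comes down to utilization: a reservation is paid for every hour of its term, while a Pay-as-you-go VM is billed only while it runs. The minimal sketch below computes the break-even point under that simplified model. The prices are hypothetical placeholders, not Azure rates, and the model ignores billing granularity and any discounts other than the reservation.

```python
HOURS_PER_YEAR = 8760  # 365 days x 24 hours


def reserved_hourly_rate(total_reservation_cost: float, term_years: int) -> float:
    """Effective hourly cost of a reservation, which is paid whether or not the VM runs."""
    return total_reservation_cost / (term_years * HOURS_PER_YEAR)


def break_even_utilization(payg_hourly: float, reserved_hourly: float) -> float:
    """Fraction of hours a VM must run before a reservation beats Pay-as-you-go."""
    return reserved_hourly / payg_hourly


# Hypothetical prices for one instance type; not real Azure pricing.
payg = 0.20                                  # $/hr, Pay-as-you-go
reserved = reserved_hourly_rate(1051.20, 1)  # 1-year term, about 0.12 $/hr effective
threshold = break_even_utilization(payg, reserved)
print(f"Reserve if the VM runs more than {threshold:.0%} of the time "
      f"(about {threshold * 24:.1f} hours/day)")
```

A Cloud Connector or StoreFront server that runs around the clock sits at 100% utilization, above the break-even point whenever the reservation is discounted, which is why reservations suit Citrix infrastructure. A session host powered on only during business hours may fall below it.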

What is the best way to deploy and operationalize these different cost models? For example, suppose that during the design phase you determine that a specific use case requires 100 instances, 20% of which (20 instances) must be available at all times. Reserved Instances are purchased to cover this requirement. After you buy a Reserved VM Instance, the reservation discount is automatically applied to running virtual machines that match the attributes and quantity of the reservation. Therefore, Citrix Smart Scale can be used to optimize a mix of Reserved and Pay-as-you-go instances of the same instance type. Smart Scale augments standard XenDesktop power management with load-based and schedule-based policies that automate the power operations of a Delivery Group to optimize costs.
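The following sketch models how the reservation discount combines with Pay-as-you-go billing in the 20-of-100 scenario: in each hour, up to the reserved quantity of matching running VMs are covered by the reservation, and any additional VMs are billed at the Pay-as-you-go rate. This is a simplification of Azure billing that assumes all VMs share the reserved instance type and region; the hourly profile and rates are hypothetical.

```python
from typing import Dict, List


def daily_compute_cost(running_per_hour: List[int], reserved_qty: int,
                       payg_hourly: float, reserved_hourly: float) -> Dict[str, float]:
    """Simplified model: each hour, up to reserved_qty matching running VMs are covered
    by the reservation; VMs beyond that are billed at the Pay-as-you-go rate."""
    if len(running_per_hour) != 24:
        raise ValueError("expected one running-VM count per hour of the day")
    # The reservation is paid for every hour, used or not.
    reserved_cost = reserved_qty * reserved_hourly * len(running_per_hour)
    payg_hours = sum(max(0, n - reserved_qty) for n in running_per_hour)
    unused_reserved_hours = sum(max(0, reserved_qty - n) for n in running_per_hour)
    return {
        "reserved_cost": reserved_cost,
        "payg_cost": payg_hours * payg_hourly,
        "payg_vm_hours": payg_hours,
        "unused_reserved_vm_hours": unused_reserved_hours,
    }


# Hypothetical day for the 100-instance use case with 20 reserved instances:
# 8 off-peak hours at the 20-VM minimum, 6 shoulder hours at 50, 10 peak hours at 100.
profile = [20] * 8 + [50] * 6 + [100] * 10
costs = daily_compute_cost(profile, reserved_qty=20, payg_hourly=0.20, reserved_hourly=0.12)
print(costs)
```

If the powered-on count ever drops below the reserved quantity, `unused_reserved_vm_hours` grows and that prepaid capacity is wasted, which is why the always-on minimum should line up with the reservation.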

Within Smart Scale, the default (minimum) number of machines can be set to match the Reserved Instances determined during the design phase to support a given use case. In the scenario above, this would be 20% (20 machines). Then, using load-based policies and additional schedules for peak and off-peak hours, the Pay-as-you-go instances can be powered on and off based on demand. This is illustrated in the diagram below.
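Here is a rough sketch of the decision such a policy makes each hour: keep the reserved minimum (20 machines in the scenario above) powered on at all times, raise the floor during peak hours, and add Pay-as-you-go machines when session load requires it. The class, field names, peak window, session density, and buffer are hypothetical; this is not Smart Scale's API or its actual algorithm, only an illustration of combining schedule-based and load-based rules.

```python
from dataclasses import dataclass


@dataclass
class PowerPolicy:
    """Hypothetical Smart Scale-style settings; not the Smart Scale API or algorithm."""
    pool_size: int              # machines in the Delivery Group, e.g. 100
    reserved_minimum: int       # always-on machines backed by Reserved Instances, e.g. 20
    peak_start: int             # first peak hour (24-hour clock)
    peak_end: int               # first hour after the peak window
    peak_minimum: int           # pre-warmed machines during peak hours
    sessions_per_machine: int   # assumed session density per machine
    buffer_pct: int = 10        # spare capacity kept powered on, in percent

    def machines_to_power_on(self, hour: int, active_sessions: int) -> int:
        # Schedule-based floor: never below the reserved count.
        in_peak = self.peak_start <= hour < self.peak_end
        floor = max(self.reserved_minimum, self.peak_minimum if in_peak else 0)
        # Load-based demand plus buffer, using integer ceiling division to avoid float rounding.
        demand = active_sessions * (100 + self.buffer_pct)
        needed = -(-demand // (100 * self.sessions_per_machine))
        return min(self.pool_size, max(floor, needed))


policy = PowerPolicy(pool_size=100, reserved_minimum=20, peak_start=8, peak_end=18,
                     peak_minimum=40, sessions_per_machine=10)
for hour, sessions in [(2, 30), (9, 100), (11, 900), (14, 1000)]:
    print(hour, sessions, policy.machines_to_power_on(hour, sessions))
```

Overnight the count never drops below the 20 reserved machines, and at full load it is capped at the pool size, so only the machines between those bounds incur Pay-as-you-go charges.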

In addition to Smart Scale, Azure Hybrid Benefit is also available for Windows Server based workloads and should be considered to reduce the cost of Azure compute by leveraging existing Windows Server licenses with Software Assurance.
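Azure Hybrid Benefit lets eligible Windows Server VMs be billed at the base compute rate, without the Windows license component. The minimal sketch below estimates the resulting savings using hypothetical rates and hours rather than published pricing. When deploying with the Azure CLI, the benefit is applied with `az vm create --license-type Windows_Server`.

```python
def fleet_compute_cost(vm_count: int, hours_per_vm: float, base_hourly: float,
                       windows_license_hourly: float, hybrid_benefit: bool) -> float:
    """Compute cost for a Windows Server fleet. With Azure Hybrid Benefit, the
    Windows license component is dropped and only the base compute rate is billed."""
    hourly = base_hourly + (0.0 if hybrid_benefit else windows_license_hourly)
    return vm_count * hours_per_vm * hourly


# Hypothetical rates and usage: 80 Pay-as-you-go session hosts running 300 hours a month.
without_ahb = fleet_compute_cost(80, 300, 0.10, 0.09, hybrid_benefit=False)
with_ahb = fleet_compute_cost(80, 300, 0.10, 0.09, hybrid_benefit=True)
print(f"Monthly savings with Azure Hybrid Benefit: ${without_ahb - with_ahb:,.2f}")
```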

Azure Instances — Citrix Infrastructure

Each Citrix component runs on an Azure virtual machine type suited to its role. Each available VM series maps to a specific category of workloads (general purpose, compute-optimized, etc.), with various sizes controlling the resources allocated to the VM (CPU, memory, IOPS, network, etc.).

In Citrix deployments, we commonly see D-Series and F-Series VMs in production for the Citrix infrastructure components and XenApp/XenDesktop workloads, sized according to a customer’s projected concurrency and resource requirements, with D-Series being the most common. Why D-Series or F-Series? From a Citrix perspective, most infrastructure components (Cloud Connectors, StoreFront, NetScaler, etc.) rely on CPU to run their core processes. The general-purpose D-Series offers a balanced CPU-to-memory ratio and the compute-optimized F-Series a higher CPU-to-memory ratio; both run on uniform hardware (unlike A-Series) for more consistent performance and offer sizes that support premium storage (more on that later).

The size and number of components within your infrastructure will always depend on your requirements, scale, and workloads. However, with Azure you can scale dynamically and on demand! For cost-conscious customers, starting smaller and scaling up might be preferred.

I have created an example growth plan for Cloud Connectors supporting an Azure Resource Location. Please note this is for reference only; always monitor key resources such as CPU and memory, and scale accordingly if you encounter resource bottlenecks. Azure VMs require a reboot when changing size, so resizing should be performed only during scheduled maintenance windows and through established change control policies.
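As a starting point for this monitoring advice, the sketch below flags a Cloud Connector for a resize only when sustained load, measured as the 95th percentile of CPU or memory samples, crosses a threshold, so a single spike does not trigger it. Because changing the VM size reboots it, the resize is deferred to a maintenance window. The thresholds, the 22:00 to 04:00 window, and the sample data are hypothetical; the samples would come from your monitoring tool.

```python
from datetime import datetime
from typing import Sequence

# Hypothetical thresholds; tune them to your own baseline.
CPU_THRESHOLD_PCT = 80
MEMORY_THRESHOLD_PCT = 85


def p95(samples: Sequence[float]) -> float:
    """95th percentile using the nearest-rank method."""
    if not samples:
        raise ValueError("no samples collected")
    ordered = sorted(samples)
    rank = -(-95 * len(ordered) // 100)  # ceil(0.95 * n) without floats
    return ordered[rank - 1]


def in_maintenance_window(now: datetime, start_hour: int = 22, end_hour: int = 4) -> bool:
    """True if now falls inside a window that may wrap past midnight."""
    if start_hour <= end_hour:
        return start_hour <= now.hour < end_hour
    return now.hour >= start_hour or now.hour < end_hour


def resize_decision(cpu_pct: Sequence[float], mem_pct: Sequence[float], now: datetime) -> str:
    bottleneck = p95(cpu_pct) >= CPU_THRESHOLD_PCT or p95(mem_pct) >= MEMORY_THRESHOLD_PCT
    if not bottleneck:
        return "no change"
    # Resizing reboots the VM, so only act inside the approved window.
    return "resize" if in_maintenance_window(now) else "defer to maintenance window"


cpu = [50] * 10 + [90] * 10  # sustained high CPU, not a single spike
mem = [40] * 20
print(resize_decision(cpu, mem, datetime(2018, 4, 19, 14, 0)))
```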

If you want to learn more, please join Jeff and me as we review the leading practices for deploying Citrix workloads on Microsoft Azure, including a live Q&A session! We will take a deep dive into the deployment considerations for the Azure building blocks (subscription, compute, network, storage), Active Directory, user profiles, and business continuity in a free technical webinar on Thursday, April 19th at 9AM and 2PM EST.

Citrix Cloud and Microsoft Azure: Deployment Deep Dive — REGISTER TODAY!

Thank you,

Kevin Nardone

Enterprise Architect